Mapping ZFS disk access patterns

I was curious how important TRIMming SSDs is when using them with ZFS. We know that classic NAND-based drives suffer performance degradation the more they are filled with data. But if only a small area of the storage is used and the rest is left untouched, would performance still degrade over time?

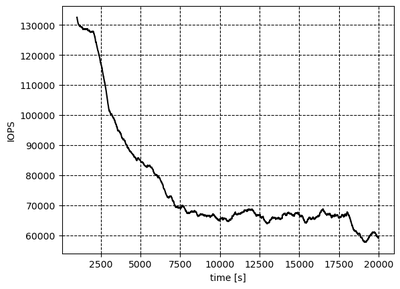

To check this, I created a zpool (without autotrim enabled) on a 3.5 TB NVMe NAND drive, with a single 1.5 TB zvol on it. On this zvol I then ran fio for 20 000 seconds, writing random 4 kB blocks to a 50 GB area. During the run I recorded IOPS every second, which resulted in this graph:

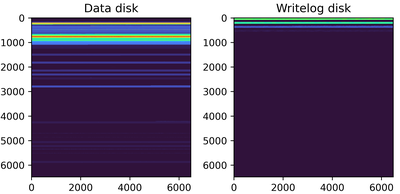
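The exact fio options are not listed above, so the following is only a sketch of how such a run could be scripted and evaluated, assuming fio's per-second IOPS logging (`--write_iops_log` together with `--log_avg_msec=1000`). The job name, the device path, and the `libaio`/`direct`/`iodepth` settings are hypothetical; only the 4 kB random writes, the 50 GB area and the 20 000 s runtime come from the text. The parser reads just the first two columns of each log line (time in ms and the averaged IOPS value), and `degradation_ratio` compares the mean IOPS of the last window of samples with that of the first.

```python
from statistics import mean


def build_fio_args(device: str, runtime_s: int = 20_000) -> list[str]:
    """Hypothetical fio command line approximating the test described above.

    Only the 4 kB random writes, the 50 GB area and the runtime come from the
    text; the ioengine, direct and iodepth values are assumptions.
    """
    return [
        "fio",
        "--name=zvol-randwrite",
        f"--filename={device}",      # e.g. /dev/zvol/<pool>/<zvol> on Linux
        "--rw=randwrite",
        "--bs=4k",
        "--size=50G",                # confine the random writes to a 50 GB region
        "--time_based",
        f"--runtime={runtime_s}",
        "--ioengine=libaio",         # assumption
        "--direct=1",                # assumption
        "--iodepth=32",              # assumption
        "--write_iops_log=zvol",     # prefix fio uses to name the IOPS log file
        "--log_avg_msec=1000",       # one averaged IOPS sample per second
    ]


def parse_iops_log(text: str) -> list[tuple[float, float]]:
    """Return (seconds, iops) pairs from the text of an fio IOPS log.

    Only the first two comma-separated columns (time in ms, value) are read,
    so any additional columns are ignored.
    """
    samples = []
    for line in text.splitlines():
        fields = [f.strip() for f in line.split(",")]
        if len(fields) < 2 or not fields[0]:
            continue  # skip blank or malformed lines
        samples.append((float(fields[0]) / 1000.0, float(fields[1])))
    return samples


def degradation_ratio(samples: list[tuple[float, float]], window: int = 600) -> float:
    """Mean IOPS of the last `window` samples divided by that of the first `window`."""
    values = [iops for _, iops in samples]
    if window <= 0 or len(values) < 2 * window:
        raise ValueError("need at least two full windows of samples")
    return mean(values[-window:]) / mean(values[:window])
```

Passing the list from `build_fio_args` to `subprocess.run` would start such a run; after it finishes, a ratio well below 1 from `degradation_ratio` would indicate the kind of slowdown visible in the graph.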

The graph shows that using even a small portion of a NAND drive for an extended period of time can lead to performance degradation. But why is that? To investigate further, I created two 20 GB disks on an Arch Linux virtual machine with ZFS and blktrace installed. On the first disk I created a pool with a 15 GB zvol, and attached the second disk to the pool as a slog (separate ZFS intent log device). As before, I ran fio writing random 4 kB blocks to the zvol, this time using only a 100 MB area. After gathering blktrace data for one hour, I used it to create a heat map of disk sector access:

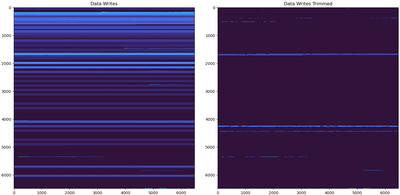
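The tooling used to build the heat map is not described, so here is a minimal sketch of one way to do it, assuming the trace was converted to text with blkparse in its default output format (`dev cpu seq time pid action RWBS sector + count [process]`). It counts only completed (`C`) requests whose RWBS field contains `W`, treats sectors as the 512-byte units blkparse reports, and lays the counts out row by row, one pixel per sector by default with sector 0 at the top left. The function names, the row width and the `sectors_per_pixel` option are illustrative assumptions; the resulting rows can be handed to any image or plotting library for coloring.

```python
import math
from array import array
from typing import Iterable, Iterator


def parse_blkparse_writes(lines: Iterable[str], action: str = "C") -> Iterator[tuple[int, int]]:
    """Yield (start_sector, sector_count) for write events in blkparse text output.

    Assumes blkparse's default line format:
    dev cpu seq time pid action RWBS sector + count [process]
    """
    for line in lines:
        f = line.split()
        # Skip summary lines and events without a "sector + count" payload.
        if len(f) < 10 or f[5] != action or f[8] != "+":
            continue
        if "W" not in f[6]:  # RWBS field, e.g. "W" or "WS" for a sync write
            continue
        yield int(f[7]), int(f[9])


def heat_map(events: Iterable[tuple[int, int]], total_sectors: int, width: int,
             sectors_per_pixel: int = 1) -> list[array]:
    """Accumulate write counts into rows of pixels, row-major from sector 0.

    With sectors_per_pixel=1 each pixel is one sector; larger values sum the
    counts of neighbouring sectors into a single pixel.
    """
    n_pixels = math.ceil(total_sectors / sectors_per_pixel)
    counts = array("I", [0]) * n_pixels  # unsigned int counter per pixel
    for start, length in events:
        # Clamp to the device size in case the trace reaches past it.
        for sector in range(start, min(start + length, total_sectors)):
            counts[sector // sectors_per_pixel] += 1
    height = math.ceil(n_pixels / width)
    return [counts[r * width:(r + 1) * width] for r in range(height)]
```

At one pixel per sector a 20 GB disk yields roughly 42 million pixels, so a coarser `sectors_per_pixel` may be more practical for a quick overview, at the cost of the per-sector detail described in the next paragraph.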

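Every pixel on this map represents one disk sector; the more times a sector was written to, the 'hotter' it gets. It is clearly visible that ZFS writes data across the whole pool vdev, even though fio touched only 100 MB of the zvol. This is expected from a copy-on-write filesystem: ZFS does not overwrite live data blocks in place, so each rewrite of the same zvol block is written to a new location on the disk. The slog behaves differently: with the zvol's sync=always property set, data is written to only four contiguous areas of the slog device, leaving the rest of the disk untouched.

This shows the importance of TRIMming SSDs. Without TRIM, the drive cannot know which of the many blocks ZFS has written and later freed are no longer needed, so it has to treat them all as live data, which makes its internal garbage collection progressively more expensive. When I repeated the process with autotrim enabled, the heat maps, with and without TRIM operations accounted for, looked like this: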

This looks a lot nicer!
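The slog observation above (a few contiguous written areas, with the rest of the device untouched) can also be checked numerically rather than by eye. A minimal sketch, assuming the per-sector write counts are already available as a flat sequence of integers (for example, accumulated from the blktrace data); the function names are hypothetical.

```python
from typing import Sequence


def written_extents(counts: Sequence[int]) -> list[tuple[int, int]]:
    """Collapse per-sector write counts into [start, end) runs of written sectors."""
    extents = []
    start = None
    for sector, count in enumerate(counts):
        if count and start is None:
            start = sector  # a written run begins here
        elif not count and start is not None:
            extents.append((start, sector))  # the run ended at the previous sector
            start = None
    if start is not None:
        extents.append((start, len(counts)))  # the run reaches the end of the device
    return extents


def untouched_fraction(counts: Sequence[int]) -> float:
    """Fraction of sectors that were never written during the trace."""
    if not counts:
        raise ValueError("empty count sequence")
    return sum(1 for count in counts if count == 0) / len(counts)
```

On the slog trace described above, `written_extents` would be expected to return only a handful of runs corresponding to the four areas visible in the heat map, while the same check on the pool vdev would show writes spread across the whole disk.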